Businesses exploring Voice AI often begin with a simple goal: “Make an agent that can talk.”

But the moment you move from a demo environment to real customer interactions, an entirely different set of challenges emerges: infrastructure, latency, routing, orchestration, compliance, and reliability.

At Altegon, we’ve spent time building, auditing, and deploying Voice AI systems for enterprise use cases; customer support, outbound operations, sales automation, logistics coordination, and multi-agent workflows. Across dozens of prototypes and production scenarios, we observed patterns that every B2B team should understand before investing in Voice AI.

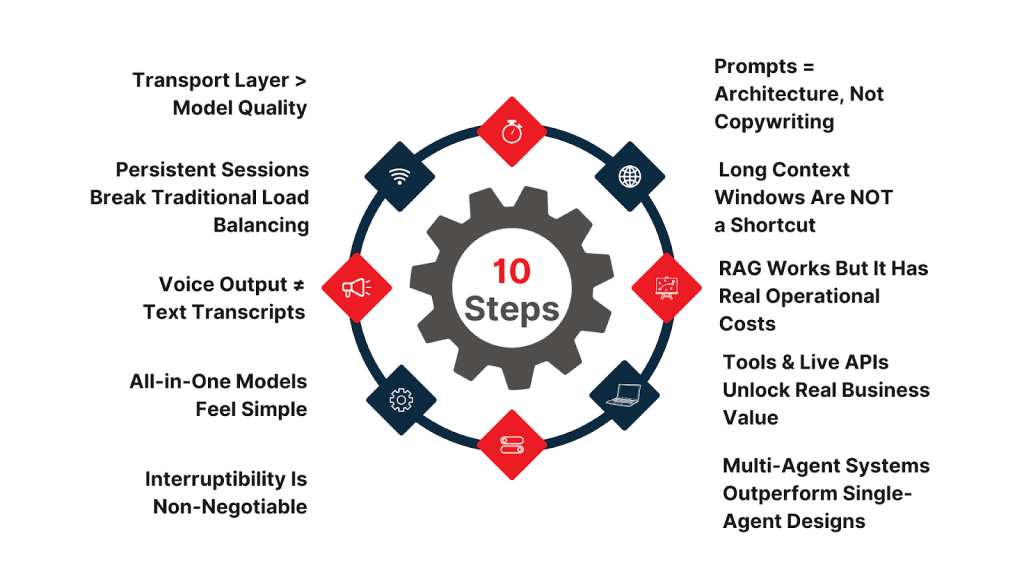

Here are the 10 most important lessons we’ve learned explained in depth and optimized for B2B readers evaluating Voice AI solutions.

1. Transport Layer > Model Quality

Most companies obsess over model choice GPT, Claude, Llama but voice interaction succeeds or fails on latency.

For human-natural conversations, transport protocols matter more:

- UDP and WebRTC deliver ultra-low latency

- WebSockets introduce jitter, buffering, and lag

- Traditional HTTP streaming collapses under real-time loads

In industries like contact centers, healthcare triage, and fleet management, even a 300ms delay breaks user trust. Low latency isn’t an optimization, it’s a requirement.

2. Persistent Sessions Break Traditional Load Balancing

Voice AI agents create long-lived, high-bandwidth sessions.

But enterprise load balancers (round-robin, least-connections) treat each audio packet as a new request, scattering traffic.

This results in:

- dropped connections

- session resets

- inconsistent agent behavior

Altegon solved this with:

- hash-based routing

- Redis-backed session pinning

- node affinity policies for audio streams

Enterprise voice requires network architecture, not just AI architecture.

3. Voice Output ≠ Text Transcripts

A hidden compliance risk:

What the TTS model says is not always what the transcript engine writes.

This can break:

- auditing

- legal review

- healthcare reporting

- financial compliance

To solve this, Altegon uses:

- dual-channel instrumentation

- parallel transcript verification

- audio-first truth sources

This ensures enterprises don’t rely on flawed transcripts.

4. All-in-One Models Feel Simple… Until They Don’t

Voice-to-voice models collapse STT, LLM, and TTS into one block.

Great for demos, terrible for debugging.

Cascaded pipelines enable:

- pinpointing which component failed

- swapping providers (OpenAI → Deepgram → Azure → OpenSource)

- customizing for domain-specific accuracy

- optimizing latency at each layer

For real production reliability, modular beats are monolithic every time.

5. Interruptibility Is Non-Negotiable

Customers interrupt constantly.

Most Audio LLM systems freeze or ignore interruptions because WebSockets don’t handle duplex audio well.

Enterprise-grade agents require:

- true barge-in

- hotword cancellation

- buffer preemption

Without interruptibility, call flows collapse especially in support centers.

6. Prompts = Architecture, Not Copywriting

Prompts aren’t text.

They’re systems design.

Prompts define:

- persona

- safety rules

- escalation logic

- regulatory boundaries

- integration behavior

- memory control

At Altegon, we treat prompts like code:

- version control

- stress tests

- jailbreak audits

- structured evaluation pipelines

This is mandatory for highly regulated sectors (finance, insurance, healthcare).

7. Long Context Windows Are NOT a Shortcut

Stuffing long customer histories into a prompt increases:

- hallucinations

- memory drift

- irrelevant associations

Especially in voice mode, models lose grounding.

The solution isn’t “more context” it’s better data architecture:

- structured memory

- short-term conversational buffers

- task-oriented context blocks

Context must be engineered, not dumped.

8. RAG Works But It Has Real Operational Costs

Retrieval-Augmented Generation boosts accuracy, but it’s not plug-and-play.

Enterprises need:

- continuous ingestion

- document freshness policies

- domain-specific indexing

- vector database monitoring

- region-specific knowledge bases

RAG is a data operations commitment, not a feature toggle.

Budgeting for RAG maintenance is essential.

9. Tools & Live APIs Unlock Real Business Value

A talking agent is a demo.

A tool-enabled agent is a worker.

Real ROI comes when voice agents can:

- schedule appointments

- create tickets

- check inventory

- process payments

- update CRMs and ERPs

- run logistics operations

But stable tool calling requires:

- disabling token streaming

- precise signatures

- retry & reprompt logic

- secure function orchestration

Altegon standardizes this via our Function Orchestration Layer, enabling safe, compliant enterprise actions.

10. Multi-Agent Systems Outperform Single-Agent Designs

One agent cannot handle:

- conversation

- data lookups

- compliance checks

- error handling

- outbound actions

- telephony orchestration

Splitting responsibilities across coordinated micro-agents yields:

- higher accuracy

- lower latency

- cleaner debugging

- safer execution

Altegon dynamically spins up agents per task, enabling complex workflows such as:

- multi-step reservations

- healthcare triage

- insurance claim intake

- logistics routing

- enterprise onboarding flows

This is where enterprise Voice AI moves from automation → orchestration → intelligence.

Read More : 10 Factors to Consider Total Cost of Ownership (TCO) of Video Communication Platforms

Wrapping Lines!

Owning your tech stack is still a frontier; the tools, patterns, and best practices are still evolving. That’s exactly why now is the time to build while the space is still forming, while there’s still room to design systems that actually work.

And honestly? We’re just getting started. Every time we think we’ve optimized a system, we build the next layer and discover entirely new opportunities and challenges we didn’t anticipate.

So the most important lesson is: build it. Break it. Rebuild it. Own it.

Ready to take control of your infrastructure and build a system that scales? Starting today!